Deep Learning Book Series · 2.7 Eigendecomposition

Last update: Mar. 2020

Introduction

We will see some major concepts of linear algebra in this chapter. We will start with defining eigenvectors and eigenvalues. We will develop on the idea that a matrix can be seen as a linear transformation and that applying a matrix on its eigenvectors gives new vectors that have the same direction. Then we will see how to express quadratic equations into matrix form. We will see that the eigendecomposition of the matrix corresponding to a quadratic equation can be used to find the minimum and maximum of this function. As a bonus, we will also see how to visualize linear transformations in Python!

2.7 Eigendecomposition

The eigendecomposition is one form of matrix decomposition. Decomposing a matrix means that we want to find a product of matrices that is equal to the initial matrix. In the case of eigendecomposition, we decompose the initial matrix into the product of its eigenvectors and eigenvalues. Before all, let’s see the link between matrices and linear transformation. Then, you’ll learn what are eigenvectors and eigenvalues.

Matrices as linear transformations

As we have seen in 2.3 with the example of the identity matrix, you can think of matrices as linear transformations. Some matrices will rotate your space, others will rescale it. When we apply a matrix to a vector, we end up with a transformed version of the vector. When we say that we apply the matrix to the vector, it means that we calculate the dot product of the matrix with the vector. We will start with a basic example of this kind of transformation.

Example 1.

A = np.array([[-1, 3], [2, -2]])

A

array([[-1, 3],

[ 2, -2]])

v = np.array([[2], [1]])

v

array([[2],

[1]])

First, let’s create a function plotVectors() to easily plot vectors and visualize transformations:

def plotVectors(vecs, cols, alpha=1):

"""

Plot set of vectors.

Parameters

----------

vecs : array-like

Coordinates of the vectors to plot. Each vectors is in an array. For

instance: [[1, 3], [2, 2]] can be used to plot 2 vectors.

cols : array-like

Colors of the vectors. For instance: ['red', 'blue'] will display the

first vector in red and the second in blue.

alpha : float

Opacity of vectors

Returns:

fig : instance of matplotlib.figure.Figure

The figure of the vectors

"""

plt.figure()

plt.axvline(x=0, color='#A9A9A9', zorder=0)

plt.axhline(y=0, color='#A9A9A9', zorder=0)

for i in range(len(vecs)):

x = np.concatenate([[0,0],vecs[i]])

plt.quiver([x[0]],

[x[1]],

[x[2]],

[x[3]],

angles='xy', scale_units='xy', scale=1, color=cols[i],

alpha=alpha)

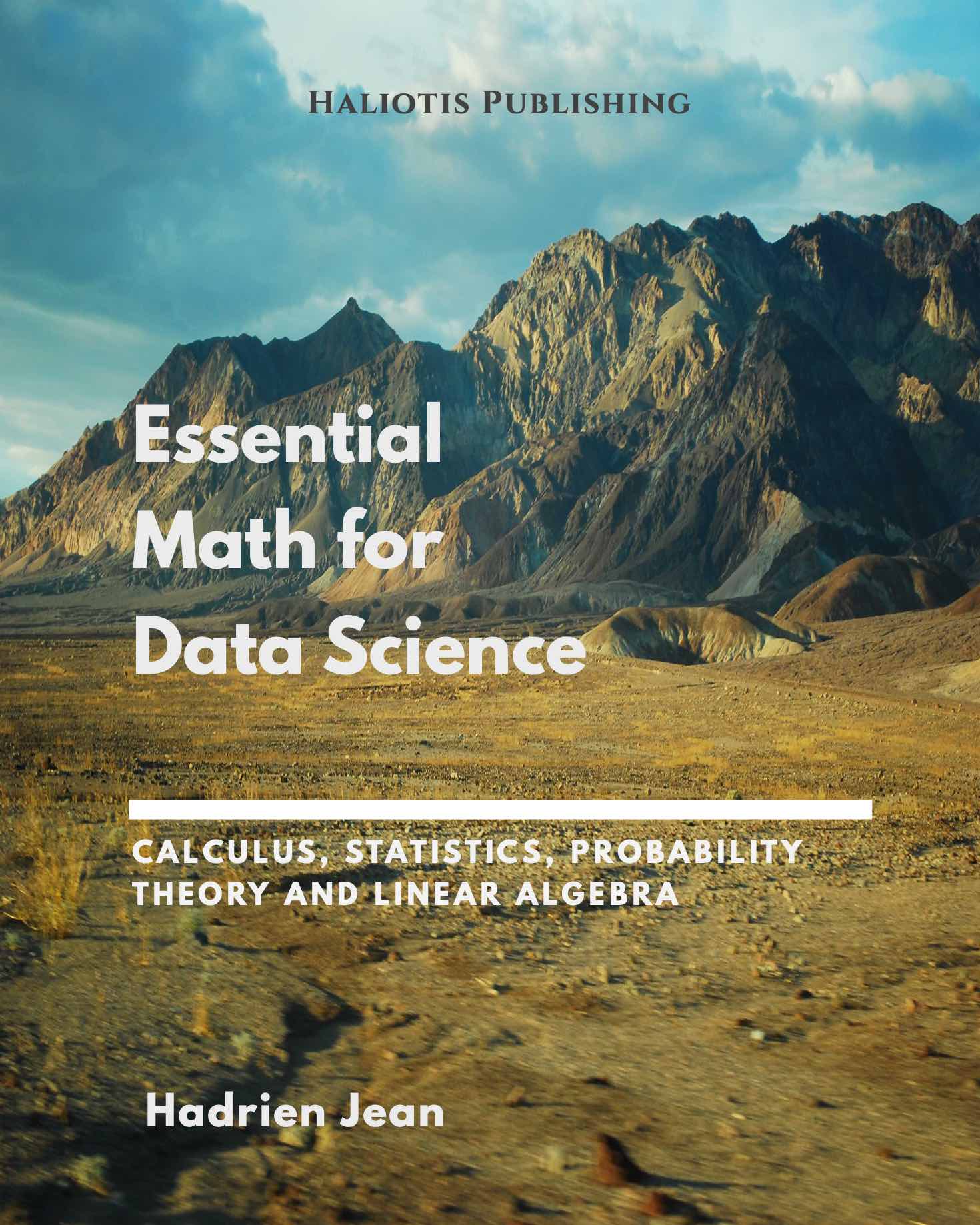

We can now use this function to plot our vector $\bs{v}$:

plotVectors([v.flatten()], cols=['#1190FF'])

plt.ylim(-1, 4)

plt.xlim(-1, 4)

(-1, 4)

A simple vector

A simple vector

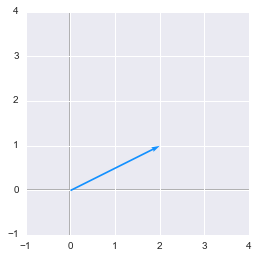

Now, let’s apply the matrix $\bs{A}$ to this vector. We’ll plot the old vector $\bs{v}$ (light blue) and the new one (orange):

Av = A.dot(v)

print(Av)

plotVectors([v.flatten(), Av.flatten()], cols=['#1190FF', '#FF9A13'])

plt.ylim(-1, 4)

plt.xlim(-1, 4)

[[1]

[2]]

(-1, 4)

The vector $\bs{v}$ (blue) and its transformed version (orange)

The vector $\bs{v}$ (blue) and its transformed version (orange)

We can see that applying the matrix $\bs{A}$ has the effect of modifying the vector.

You can think of matrices as linear transformation recipes. Let’s see the case of a very special type of vector: the eigenvectors.

Eigenvectors and eigenvalues

We have seen an example of a vector transformed by a matrix. Now imagine that the transformation of the initial vector gives us a new vector that has the exact same direction. The scale can be different but the direction is the same. Applying the matrix doesn’t change the direction of the vector. This special vector is called an eigenvector of the matrix. We will see that finding the eigenvectors of a matrix can be very useful.

Imagine that the transformation of the initial vector by the matrix gives a new vector with the exact same direction. This vector is called an eigenvector of $\bs{A}$.

This means that $\bs{v}$ is a eigenvector of $\bs{A}$ if $\bs{v}$ and $\bs{Av}$ (the transformed vector) are in the same direction. The output vector is just a scaled version of the input vector. This scalling factor is $\lambda$ which is called the eigenvalue of $\bs{A}$.

Mathematically, we have the following equation:

\(\bs{Av} = \lambda\bs{v}\)

The vector $\bs{Av}$ is the vector $\bs{v}$ transformed by the matrix $\bs{A}$. This transformed vector is a scaled version (scaled by the value $\lambda$) of the initial vector $\bs{v}$.

Example 2.

Let’s $\bs{A}$ be the following matrix:

$ \bs{A}= \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix} $

We know that one eigenvector of $\bs{A}$ is:

\(\bs{v}= \begin{bmatrix} 1\\\\ 1 \end{bmatrix}\)

We can check that $\bs{Av} = \lambda\bs{v}$:

$ \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix} \begin{bmatrix} 1\\ 1 \end{bmatrix}=\begin{bmatrix} 6\\ 6 \end{bmatrix} $

We can see that:

\(6\times \begin{bmatrix} 1\\\\ 1 \end{bmatrix} = \begin{bmatrix} 6\\\\ 6 \end{bmatrix}\)

which means that $\bs{v}$ is well an eigenvector of $\bs{A}$. Also, the corresponding eigenvalue is $\lambda=6$.

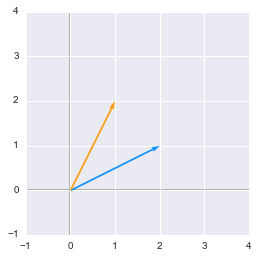

We can represent $\bs{v}$ and $\bs{Av}$ to check if their directions are the same:

A = np.array([[5, 1], [3, 3]])

A

array([[5, 1],

[3, 3]])

v = np.array([[1], [1]])

v

array([[1],

[1]])

Av = A.dot(v)

orange = '#FF9A13'

blue = '#1190FF'

plotVectors([Av.flatten(), v.flatten()], cols=[blue, orange])

plt.ylim(-1, 7)

plt.xlim(-1, 7)

(-1, 7)

An eigenvector of a matrix doesn’t change direction when we apply this matrix

An eigenvector of a matrix doesn’t change direction when we apply this matrix

We can see that their directions are the same!

Another eigenvector of $\bs{A}$ is

\(\bs{v}= \begin{bmatrix} 1\\\\ -3 \end{bmatrix}\)

because

$ \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix}\begin{bmatrix} 1\\ -3 \end{bmatrix} = \begin{bmatrix} 2\\ -6 \end{bmatrix} $

and

\(2 \times \begin{bmatrix} 1\\\\ -3 \end{bmatrix} = \begin{bmatrix} 2\\\\ -6 \end{bmatrix}\)

So the corresponding eigenvalue is $\lambda=2$.

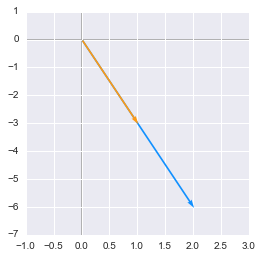

v = np.array([[1], [-3]])

v

array([[ 1],

[-3]])

Av = A.dot(v)

plotVectors([Av.flatten(), v.flatten()], cols=[blue, orange])

plt.ylim(-7, 1)

plt.xlim(-1, 3)

(-1, 3)

Another eigenvector and its transformation

Another eigenvector and its transformation

This example shows that the eigenvectors $\bs{v}$ are vectors that change only in scale when we apply the matrix $\bs{A}$ to them. Here the scales were 6 for the first eigenvector and 2 to the second but $\lambda$ can take any real or even complex value.

Find eigenvalues and eigenvectors in Python

Numpy provides a function returning eigenvectors and eigenvalues (the first array corresponds to the eigenvalues and the second to the eigenvectors concatenated in columns):

(array([ 6., 2.]), array([[ 0.70710678, -0.31622777],

[ 0.70710678, 0.9486833 ]]))

Here a demonstration with the preceding example.

A = np.array([[5, 1], [3, 3]])

A

array([[5, 1],

[3, 3]])

np.linalg.eig(A)

(array([ 6., 2.]), array([[ 0.70710678, -0.31622777],

[ 0.70710678, 0.9486833 ]]))

We can see that the eigenvalues are the same than the ones we used before: 6 and 2 (first array).

The eigenvectors correspond to the columns of the second array. This means that the eigenvector corresponding to $\lambda=6$ is:

\(\begin{bmatrix} 0.70710678\\\\ 0.70710678 \end{bmatrix}\)

The eigenvector corresponding to $\lambda=2$ is:

\(\begin{bmatrix} -0.31622777\\\\ 0.9486833 \end{bmatrix}\)

The eigenvectors look different because they have not necessarly the same scaling than the ones we gave in the example. We can easily see that the first corresponds to a scaled version of our $\begin{bmatrix} 1\

1 \end{bmatrix}$. But the same property stands. We still have $\bs{Av} = \lambda\bs{v}$:

$ \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix} \begin{bmatrix} 0.70710678\\ 0.70710678 \end{bmatrix}= \begin{bmatrix} 4.24264069\\ 4.24264069 \end{bmatrix} $

With $0.70710678 \times 6 = 4.24264069$. So there are an infinite number of eigenvectors corresponding to the eigenvalue $6$. They are equivalent because we are interested by their directions.

For the second eigenvector we can check that it corresponds to a scaled version of $\begin{bmatrix} 1\

-3 \end{bmatrix}$. We can draw these vectors and see if they have the same direction.

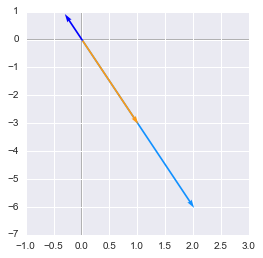

v = np.array([[1], [-3]])

Av = A.dot(v)

v_np = [-0.31622777, 0.9486833]

plotVectors([Av.flatten(), v.flatten(), v_np], cols=[blue, orange, 'blue'])

plt.ylim(-7, 1)

plt.xlim(-1, 3)

(-1, 3)

Eigenvectors found in numpy have identical directions

Eigenvectors found in numpy have identical directions

We can see that the vector found with Numpy (in dark blue) is a scaled version of our preceding $\begin{bmatrix} 1\

-3 \end{bmatrix}$.

Rescaled vectors

As we saw it with numpy, if $\bs{v}$ is an eigenvector of $\bs{A}$, then any rescaled vector $s\bs{v}$ is also an eigenvector of $\bs{A}$. The eigenvalue of the rescaled vector is the same.

Let’s try to rescale \[\bs{v}= \begin{bmatrix} 1\\\\ -3 \end{bmatrix}\]

from our preceding example.

For instance,

\(\bs{3v}= \begin{bmatrix} 3\\\\ -9 \end{bmatrix}\)

So we have:

$ \begin{aligned} \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix} \begin{bmatrix} 3\\ -9 \end{bmatrix} &= \begin{bmatrix} 6\\ -18 \end{bmatrix}\\ &= 2 \times \begin{bmatrix} 3\\ -9 \end{bmatrix} \end{aligned} $

This corresponds to $\bs{A}\times 3\bs{v} = \lambda \times 3\bs{v}$ and the eigenvalue is still $\lambda=2$.

Concatenating eigenvalues and eigenvectors

Now that we have an idea of what eigenvectors and eigenvalues are, we can see how it can be used to decompose a matrix. All eigenvectors of a matrix $\bs{A}$ can be concatenated in a matrix with each column corresponding to each eigenvector (like in the second array return by np.linalg.eig(A)):

$ \bs{V}= \begin{bmatrix} 1 & 1\\ 1 & -3 \end{bmatrix} $

The first column \[\begin{bmatrix} 1\\\\ 1 \end{bmatrix}\]

corresponds to $\lambda=6$ and the second \[\begin{bmatrix} 1\\\\ -3 \end{bmatrix}\]

to $\lambda=2$.

The vector $\bs{\lambda}$ can be created from all eigenvalues:

\(\bs{\lambda}= \begin{bmatrix} 6\\\\ 2 \end{bmatrix}\)

Then the eigendecomposition is given by

\(\bs{A}=\bs{V}\cdot diag(\bs{\lambda}) \cdot \bs{V}^{-1}\)

We can decompose the matrix $\bs{A}$ with eigenvectors and eigenvalues. It is done with: $\bs{A}=\bs{V}\cdot diag(\bs{\lambda}) \cdot \bs{V}^{-1}$

$diag(\bs{v})$ is a diagonal matrix (see 2.6) containing all the eigenvalues. Continuing with our example we have

$ \bs{V}=\begin{bmatrix} 1 & 1\\ 1 & -3 \end{bmatrix} $

The diagonal matrix is all zeros except the diagonal that is our vector $\bs{\lambda}$.

$ diag(\bs{v})= \begin{bmatrix} 6 & 0\\ 0 & 2 \end{bmatrix} $

The inverse matrix of $\bs{V}$ can be calculated with numpy:

V = np.array([[1, 1], [1, -3]])

V

array([[ 1, 1],

[ 1, -3]])

V_inv = np.linalg.inv(V)

V_inv

array([[ 0.75, 0.25],

[ 0.25, -0.25]])

So let’s plug

$ \bs{V}^{-1}=\begin{bmatrix} 0.75 & 0.25\\ 0.25 & -0.25 \end{bmatrix} $

into our equation:

$ \begin{aligned} &\bs{V}\cdot diag(\bs{\lambda}) \cdot \bs{V}^{-1}\\ &= \begin{bmatrix} 1 & 1\\ 1 & -3 \end{bmatrix} \begin{bmatrix} 6 & 0\\ 0 & 2 \end{bmatrix} \begin{bmatrix} 0.75 & 0.25\\ 0.25 & -0.25 \end{bmatrix} \end{aligned} $

If we do the dot product of the first two matrices we have:

$ \begin{bmatrix} 1 & 1\\ 1 & -3 \end{bmatrix} \begin{bmatrix} 6 & 0\\ 0 & 2 \end{bmatrix} = \begin{bmatrix} 6 & 2\\ 6 & -6 \end{bmatrix} $

So if we replace into the equation:

$ \begin{aligned} &\begin{bmatrix} 6 & 2\\ 6 & -6 \end{bmatrix} \begin{bmatrix} 0.75 & 0.25\\ 0.25 & -0.25 \end{bmatrix}\\ &= \begin{bmatrix} 5 & 1\\ 3 & 3 \end{bmatrix}= \bs{A} \end{aligned} $

Let’s check our result with Python:

lambdas = np.diag([6, 2])

lambdas

array([[6, 0],

[0, 2]])

V.dot(lambdas).dot(V_inv)

array([[ 5., 1.],

[ 3., 3.]])

That confirms our previous calculation.

Real symmetric matrix

In the case of real symmetric matrices (more details about symmetric matrices in 2.6), the eigendecomposition can be expressed as

\(\bs{A} = \bs{Q}\Lambda \bs{Q}^\text{T}\)

where $\bs{Q}$ is the matrix with eigenvectors as columns and $\Lambda$ is $diag(\lambda)$.

Example 3.

$ \bs{A}=\begin{bmatrix} 6 & 2\\ 2 & 3 \end{bmatrix} $

This matrix is symmetric because $\bs{A}=\bs{A}^\text{T}$. Its eigenvectors are:

$ \bs{Q}= \begin{bmatrix} 0.89442719 & -0.4472136\\ 0.4472136 & 0.89442719 \end{bmatrix} $

and its eigenvalues put in a diagonal matrix gives:

$ \bs{\Lambda}= \begin{bmatrix} 7 & 0\\ 0 & 2 \end{bmatrix} $

So let’s begin with $\bs{Q\Lambda}$:

$ \begin{aligned} \bs{Q\Lambda}&= \begin{bmatrix} 0.89442719 & -0.4472136\\ 0.4472136 & 0.89442719 \end{bmatrix} \begin{bmatrix} 7 & 0\\ 0 & 2 \end{bmatrix}\\ &= \begin{bmatrix} 0.89442719 \times 7 & -0.4472136\times 2\\ 0.4472136 \times 7 & 0.89442719\times 2 \end{bmatrix}\\ &= \begin{bmatrix} 6.26099033 & -0.8944272\\ 3.1304952 & 1.78885438 \end{bmatrix} \end{aligned} $

with:

$ \bs{Q}^\text{T}= \begin{bmatrix} 0.89442719 & 0.4472136\\ -0.4472136 & 0.89442719 \end{bmatrix} $

So we have:

$ \begin{aligned} \bs{Q\Lambda} \bs{Q}^\text{T}&= \begin{bmatrix} 6.26099033 & -0.8944272\\ 3.1304952 & 1.78885438 \end{bmatrix}\\ &\cdot \begin{bmatrix} 0.89442719 & 0.4472136\\ -0.4472136 & 0.89442719 \end{bmatrix}\\ &= \begin{bmatrix} 6 & 2\\ 2 & 3 \end{bmatrix} \end{aligned} $

It works! For that reason, it can useful to use symmetric matrices! Let’s do it now with linalg from Numpy:

A = np.array([[6, 2], [2, 3]])

A

array([[6, 2],

[2, 3]])

eigVals, eigVecs = np.linalg.eig(A)

eigVecs

array([[ 0.89442719, -0.4472136 ],

[ 0.4472136 , 0.89442719]])

eigVals = np.diag(eigVals)

eigVals

array([[ 7., 0.],

[ 0., 2.]])

eigVecs.dot(eigVals).dot(eigVecs.T)

array([[ 6., 2.],

[ 2., 3.]])

We can see that the result corresponds to our initial matrix.

Quadratic form to matrix form

Eigendecomposition can be used to optimize quadratic functions. We will see that when $\bs{x}$ takes the values of an eigenvector, $f(\bs{x})$ takes the value of its corresponding eigenvalue.

When $\bs{x}$ takes the values of an eigenvector, $f(\bs{x})$ takes the value of its corresponding eigenvalue.

We will see in the following points how we can show that with different methods.

Let’s have the following quadratic equation:

\(f(\bs{x}) = ax_1^2 +(b+c)x_1x_2 + dx_2^2\)

These quadratic forms can be generated by matrices:

$ \begin{aligned} f(\bs{x}) &= \begin{bmatrix} x_1 & x_2 \end{bmatrix}\begin{bmatrix} a & b\\ c & d \end{bmatrix}\begin{bmatrix} x_1\\ x_2 \end{bmatrix}\\ &= \bs{x^\text{T}Ax} \end{aligned} $

with:

$ \bs{x} = \begin{bmatrix} x_1\\ x_2 \end{bmatrix} $

and

$ \bs{A}=\begin{bmatrix} a & b\\ c & d \end{bmatrix} $

We call them matrix forms. This form is useful to do various things on the quadratic equation like constrained optimization (see bellow).

Quadratic equations can be expressed under the matrix form

If you look at the relation between these forms you can see that $a$ gives you the number of $x_1^2$, $(b + c)$ the number of $x_1x_2$ and $d$ the number of $x_2^2$. This means that the same quadratic form can be obtained from infinite number of matrices $\bs{A}$ by changing $b$ and $c$ while preserving their sum.

Example 4.

\(\bs{x} = \begin{bmatrix} x_1\\\\ x_2 \end{bmatrix}\)

and

$ \bs{A}=\begin{bmatrix} 2 & 4\\ 2 & 5 \end{bmatrix} $

gives the following quadratic form:

$ \begin{aligned} &2x_1^2 + (4+2)x_1x_2 + 5x_2^2\\ &=2x_1^2 + 6x_1x_2 + 5x_2^2 \end{aligned} $

but if:

$ \bs{A}=\begin{bmatrix} 2 & -3\\ 9 & 5 \end{bmatrix} $

we still have the quadratic same form:

$ \begin{aligned} &2x_1^2 + (-3+9)x_1x_2 + 5x_2^2\\ &=2x_1^2 + 6x_1x_2 + 5x_2^2 \end{aligned} $

Example 5

For this example, we will go from the matrix form to the quadratic form using a symmetric matrix $\bs{A}$. Let’s use the matrix of the example 3.

$ \bs{x} = \begin{bmatrix} x_1\\ x_2 \end{bmatrix} $

and

$ \bs{A}=\begin{bmatrix} 6 & 2\\ 2 & 3 \end{bmatrix} $

$ \begin{aligned} \bs{x^\text{T}Ax}&= \begin{bmatrix} x_1 & x_2 \end{bmatrix} \begin{bmatrix} 6 & 2\\ 2 & 3 \end{bmatrix} \begin{bmatrix} x_1\\ x_2 \end{bmatrix}\\ &= \begin{bmatrix} x_1 & x_2 \end{bmatrix} \begin{bmatrix} 6 x_1 + 2 x_2\\ 2 x_1 + 3 x_2 \end{bmatrix}\\ &= x_1(6 x_1 + 2 x_2) + x_2(2 x_1 + 3 x_2)\\ &= 6 x_1^2 + 4 x_1x_2 + 3 x_2^2 \end{aligned} $

Our quadratic equation is thus:

\(6 x_1^2 + 4 x_1x_2 + 3 x_2^2\)

Note

If $\bs{A}$ is a diagonal matrix (all 0 except the diagonal), the quadratic form of $\bs{x^\text{T}Ax}$ will have no cross term. Take the following matrix form:

$ \bs{A}=\begin{bmatrix} a & b\\ c & d \end{bmatrix} $

If $\bs{A}$ is diagonal, then $b$ and $c$ are 0 and since $f(\bs{x}) = ax_1^2 +(b+c)x_1x_2 + dx_2^2$ there is no cross term. A quadratic form without cross term is called diagonal form since it comes from a diagonal matrix.

Change of variable

A change of variable (or linear substitution) simply means that we replace a variable by another one. We will see that it can be used to remove the cross terms in our quadratic equation. Without the cross term, it will then be easier to characterize the function and eventually optimize it (i.e finding its maximum or minimum).

With the quadratic form

Example 6.

Let’s take again our previous quadratic form:

\(\bs{x^\text{T}Ax} = 6 x_1^2 + 4 x_1x_2 + 3 x_2^2\)

The change of variable will concern $x_1$ and $x_2$. We can replace $x_1$ with any combination of $y_1$ and $y_2$ and $x_2$ with any combination $y_1$ and $y_2$. We will of course end up with a new equation. The nice thing is that we can find a specific substitution that will lead to a simplification of our statement. Specifically, it can be used to get rid of the cross term (in our example: $4 x_1x_2$). We will see later why it is interesting.

Actually, the right substitution is given by the eigenvectors of the matrix used to generate the quadratic form. Let’s recall that the matrix form of our equation is:

\(\bs{x} = \begin{bmatrix} x_1\\\\ x_2 \end{bmatrix}\)

and

$ \bs{A}=\begin{bmatrix} 6 & 2\\ 2 & 3 \end{bmatrix} $

and that the eigenvectors of $\bs{A}$ are:

$ \begin{bmatrix} 0.89442719 & -0.4472136\\ 0.4472136 & 0.89442719 \end{bmatrix} $

With the purpose of simplification, we can replace these values with:

$ \begin{bmatrix} \frac{2}{\sqrt{5}} & -\frac{1}{\sqrt{5}}\\ \frac{1}{\sqrt{5}} & \frac{2}{\sqrt{5}} \end{bmatrix} = \frac{1}{\sqrt{5}} \begin{bmatrix} 2 & -1\\ 1 & 2 \end{bmatrix} $

So our first eigenvector is:

$ \frac{1}{\sqrt{5}} \begin{bmatrix} 2\\ 1 \end{bmatrix} $

and our second eigenvector is:

\(\frac{1}{\sqrt{5}} \begin{bmatrix} -1\\\\ 2 \end{bmatrix}\)

The change of variable will lead to:

$ \begin{aligned} \begin{bmatrix} x_1\\ x_2 \end{bmatrix} &= \frac{1}{\sqrt{5}} \begin{bmatrix} 2 & -1\\ 1 & 2 \end{bmatrix} \begin{bmatrix} y_1\\ y_2 \end{bmatrix}\\ &= \frac{1}{\sqrt{5}} \begin{bmatrix} 2y_1 - y_2\\ y_1 + 2y_2 \end{bmatrix} \end{aligned} $

so we have

\(\begin{cases} x_1 = \frac{1}{\sqrt{5}}(2y_1 - y_2)\\\\ x_2 = \frac{1}{\sqrt{5}}(y_1 + 2y_2) \end{cases}\)

So far so good! Let’s replace that in our example:

$ \begin{aligned} \bs{x^\text{T}Ax} &=6 x_1^2 + 4 x_1x_2 + 3 x_2^2\\ &=6 [\frac{1}{\sqrt{5}}(2y_1 - y_2)]^2 + 4 [\frac{1}{\sqrt{5}}(2y_1 - y_2)\frac{1}{\sqrt{5}}(y_1 + 2y_2)] + 3 [\frac{1}{\sqrt{5}}(y_1 + 2y_2)]^2\\ &= \frac{1}{5}[6 (2y_1 - y_2)^2 + 4 (2y_1 - y_2)(y_1 + 2y_2) + 3 (y_1 + 2y_2)^2]\\ &= \frac{1}{5}[6 (4y_1^2 - 4y_1y_2 + y_2^2) + 4 (2y_1^2 + 4y_1y_2 - y_1y_2 - 2y_2^2) + 3 (y_1^2 + 4y_1y_2 + 4y_2^2)]\\ &= \frac{1}{5}(24y_1^2 - 24y_1y_2 + 6y_2^2 + 8y_1^2 + 16y_1y_2 - 4y_1y_2 - 8y_2^2 + 3y_1^2 + 12y_1y_2 + 12y_2^2)\\ &= \frac{1}{5}(35y_1^2 + 10y_2^2)\\ &= 7y_1^2 + 2y_2^2 \end{aligned} $

That’s great! Our new equation doesn’t have any cross terms!

With the Principal Axes Theorem

Actually there is a simpler way to do the change of variable. We can stay in the matrix form. Recall that we start with the form:

\(f(\bs{x})=\bs{x^\text{T}Ax}\)

The linear substitution can be wrote in these terms. We want replace the variables $\bs{x}$ by $\bs{y}$ that relates by:

\(\bs{x}=P\bs{y}\)

We want to find $P$ such as our new equation (after the change of variable) doesn’t contain the cross terms. The first step is to replace that in the first equation:

$ \begin{aligned} \bs{x^\text{T}Ax} &= (\bs{Py})^\text{T}\bs{A}(\bs{Py})\\ &= \bs{y}^\text{T}(\bs{P}^\text{T}\bs{AP})\bs{y} \end{aligned} $

Can you see the how to transform the left hand side ($\bs{x}$) into the right hand side ($\bs{y}$)? The substitution is done by replacing $\bs{A}$ with $\bs{P^\text{T}AP}$. We also know that $\bs{A}$ is symmetric and thus that there is a diagonal matrix $\bs{D}$ containing the eigenvectors of $\bs{A}$ and such as $\bs{D}=\bs{P}^\text{T}\bs{AP}$. We thus end up with:

\(\bs{x^\text{T}Ax}=\bs{y^\text{T}\bs{D} y}\)

All of this implies that we can use $\bs{D}$ to simplify our quadratic equation and remove the cross terms. If you remember from example 2 we know that the eigenvalues of $\bs{A}$ are:

$ \bs{D}= \begin{bmatrix} 7 & 0\\ 0 & 2 \end{bmatrix} $

$ \begin{aligned} \bs{x^\text{T}Ax} &= \bs{y^\text{T}\bs{D} y} \\ &= \bs{y}^\text{T} \begin{bmatrix} 7 & 0 \\ 0 & 2 \end{bmatrix} \bs{y} \\ &= \begin{bmatrix} y_1 & y_2 \end{bmatrix} \begin{bmatrix} 7 & 0 \\ 0 & 2 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \end{bmatrix}\\ &= \begin{bmatrix} 7y_1 +0y_2 & 0y_1 + 2y_2 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} \\ &= 7y_1^2 + 2y_2^2 \end{aligned} $

That’s nice! If you look back to the change of variable that we have done in the quadratic form, you will see that we have found the same values!

This form (without cross-term) is called the principal axes form.

Summary

To summarise, the principal axes form can be found with

\(\bs{x^\text{T}Ax} = \lambda_1y_1^2 + \lambda_2y_2^2\)

where $\lambda_1$ is the eigenvalue corresponding to the first eigenvector and $\lambda_2$ the eigenvalue corresponding to the second eigenvector (second column of $\bs{x}$).

Finding f(x) with eigendecomposition

We will see that there is a way to find $f(\bs{x})$ with eigenvectors and eigenvalues when $\bs{x}$ is a unit vector.

Let’s start from:

\(f(\bs{x}) =\bs{x^\text{T}Ax}\)

We know that if $\bs{x}$ is an eigenvector of $\bs{A}$ and $\lambda$ the corresponding eigenvalue, then $ \bs{Ax}=\lambda \bs{x} $. By replacing the term in the last equation we have:

\(f(\bs{x}) =\bs{x^\text{T}\lambda x} = \bs{x^\text{T}x}\lambda\)

Since $\bs{x}$ is a unit vector, $\norm{\bs{x}}_2=1$ and $\bs{x^\text{T}x}=1$ (cf. 2.5 Norms). We end up with

\(f(\bs{x}) = \lambda\)

This is a usefull property. If $\bs{x}$ is an eigenvector of $\bs{A}$, $ f(\bs{x}) =\bs{x^\text{T}Ax}$ will take the value of the corresponding eigenvalue. We can see that this is working only if the euclidean norm of $\bs{x}$ is 1 (i.e $\bs{x}$ is a unit vector).

Example 7

This example will show that $f(\bs{x}) = \lambda$. Let’s take again the last example, the eigenvectors of $\bs{A}$ were

$ \bs{Q}= \begin{bmatrix} 0.89442719 & -0.4472136 \\ 0.4472136 & 0.89442719 \end{bmatrix} $

and the eigenvalues

$ \bs{\Lambda}= \begin{bmatrix} 7 & 0 \\ 0 & 2 \end{bmatrix} $

So if:

$ \bs{x}=\begin{bmatrix} 0.89442719 & 0.4472136 \end{bmatrix} $

$f(\bs{x})$ should be equal to 7. Let’s check that’s true.

$ \begin{aligned} f(\bs{x}) &= 6 x_1^2 + 4 x_1x_2 + 3 x_2^2 \\ &= 6\times 0.89442719^2 + 4\times 0.89442719\times 0.4472136 + 3 \times 0.4472136^2 \\ &= 7 \end{aligned} $

In the same way, if $\bs{x}=\begin{bmatrix} -0.4472136 & 0.89442719 \end{bmatrix}$, $f(\bs{x})$ should be equal to 2.

$ \begin{aligned} f(\bs{x}) &= 6 x_1^2 + 4 x_1x_2 + 3 x_2^2 \\ &= 6\times -0.4472136^2 + 4\times -0.4472136\times 0.89442719 + 3 \times 0.89442719^2 \\ &= 2 \end{aligned} $

Quadratic form optimization

Depending to the context, optimizing a function means finding its maximum or its minimum. It is for instance widely used to minimize the error of cost functions in machine learning.

Here we will see how eigendecomposition can be used to optimize quadratic functions and why this can be done easily without cross terms. The difficulty is that we want a constrained optimization, that is to find the minimum or the maximum of the function for $f(\bs{x})$ being a unit vector.

Example 8.

We want to optimize:

\(f(\bs{x}) =\bs{x^\text{T}Ax} \textrm{ subject to }||\bs{x}||_2= 1\)

In our last example we ended up with:

\(f(\bs{x}) = 7y_1^2 + 2y_2^2\)

And the constraint of $\bs{x}$ being a unit vector imply:

\(||\bs{x}||_2 = 1 \Leftrightarrow x_1^2 + x_2^2 = 1\)

We can also show that $\bs{y}$ has to be a unit vector if it is the case for $\bs{x}$. Recall first that $\bs{x}=\bs{Py}$:

$ \begin{aligned} ||\bs{x}||^2 &= \bs{x^\text{T}x} \\ &= (\bs{Py})^\text{T}(\bs{Py}) \\ &= \bs{y^\text{T}P^\text{T}Py} \\ &= \bs{y^\text{T}y} = ||\bs{y}||^2 \end{aligned} $

So $\norm{\bs{x}}^2 = \norm{\bs{y}}^2 = 1$ and thus $y_1^2 + y_2^2 = 1$

Since $y_1^2$ and $y_2^2$ cannot be negative because they are squared values, we can be sure that $2y_2^2\leq7y_2^2$. Hence:

$ \begin{aligned} f(\bs{x}) &= 7y_1^2 + 2y_2^2 \\ &\leq 7y_1^2 + 7y_2^2 \\ &\leq 7(y_1^2+y_2^2) \\ &\leq 7 \end{aligned} $

This means that the maximum value of $f(\bs{x})$ is 7.

The same way can lead to find the minimum of $f(\bs{x})$. $7y_1^2\geq2y_1^2$ and:

$ \begin{aligned} f(\bs{x}) &= 7y_1^2 + 2y_2^2 \\ &\geq 2y_1^2 + 2y_2^2 \\ &\geq 2(y_1^2+y_2^2) \\ &\geq 2 \end{aligned} $

And the minimum of $f(\bs{x})$ is 2.

Summary

We can note that the minimum of $f(\bs{x})$ is the minimum eigenvalue of the corresponding matrix $\bs{A}$. Another useful fact is that this value is obtained when $\bs{x}$ takes the value of the corresponding eigenvector (check back the preceding paragraph). In that way, $f(\bs{x})=7$ when $\bs{x}=\begin{bmatrix}0.89442719 & 0.4472136\end{bmatrix}$. This shows how useful are the eigenvalues and eigenvector in this kind of constrained optimization.

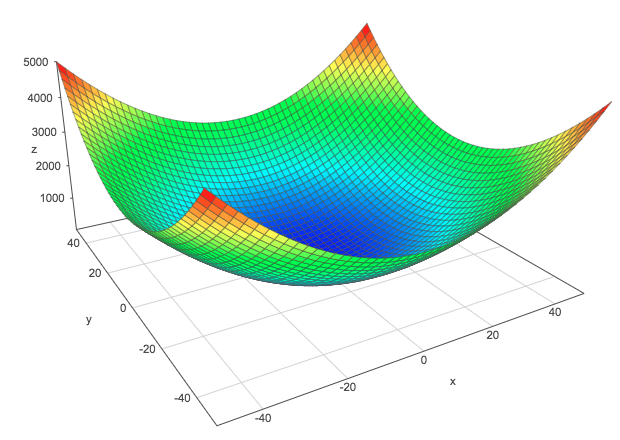

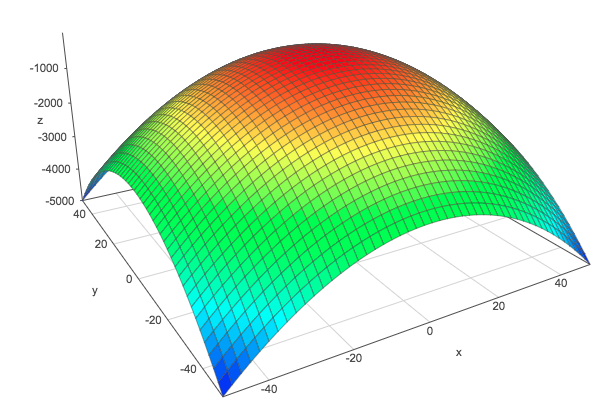

Graphical views

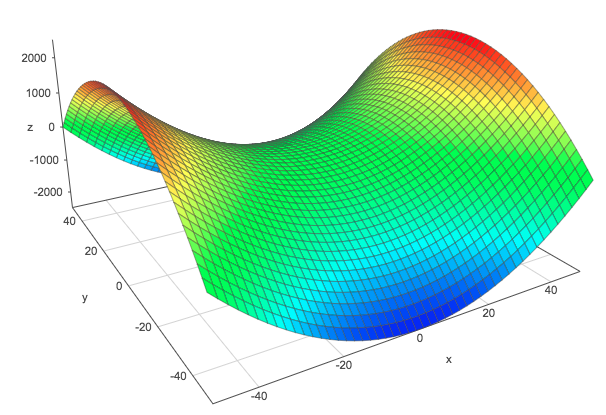

We saw that the quadratic functions $f(\bs{x}) = ax_1^2 +2bx_1x_2 + cx_2^2$ can be represented by the symmetric matrix $\bs{A}$:

$ \bs{A}=\begin{bmatrix} a & b \\ b & c \end{bmatrix} $

Graphically, these functions can take one of three general shapes (click on the links to go to the Surface Plotter and move the shapes):

| 1.Positive-definite form | 2.Negative-definite form | 3.Indefinite form |

|---|---|---|

|  |  |

With the constraints that $\bs{x}$ is a unit vector, the minimum of the function $f(\bs{x})$ corresponds to the smallest eigenvalue and is obtained with its corresponding eigenvector. The maximum corresponds to the biggest eigenvalue and is obtained with its corresponding eigenvector.

Conclusion

We have seen a lot of things in this chapter. We saw that linear algebra can be used to solve a variety of mathematical problems and more specifically that eigendecomposition is a powerful tool! However, it cannot be used for non square matrices. In the next chapter, we will see the Singular Value Decomposition (SVD) which is another way of decomposing matrices. The advantage of the SVD is that you can use it also with non-square matrices.

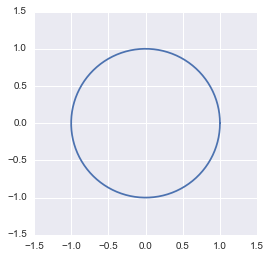

BONUS: visualizing linear transformations

We can see the effect of eigenvectors and eigenvalues in linear transformation. We will see first how linear transformation works. Linear transformation is a mapping between an input vector and an output vector. Different operations like projection or rotation are linear transformations. Every linear transformations can be though as applying a matrix on the input vector. We will see the meaning of this graphically. For that purpose, let’s start by drawing the set of unit vectors (they are all vectors with a norm of 1).

t = np.linspace(0, 2*np.pi, 100)

x = np.cos(t)

y = np.sin(t)

plt.figure()

plt.plot(x, y)

plt.xlim(-1.5, 1.5)

plt.ylim(-1.5, 1.5)

plt.show()

Unit circle

Unit circle

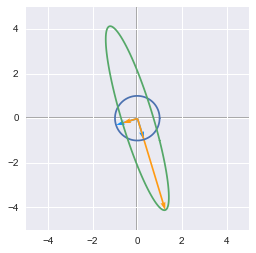

Then, we will transform each of these points by applying a matrix $\bs{A}$. This is the goal of the function bellow that takes a matrix as input and will draw

- the origin set of unit vectors

- the transformed set of unit vectors

- the eigenvectors

- the eigenvectors scalled by their eigenvalues

def linearTransformation(transformMatrix):

orange = '#FF9A13'

blue = '#1190FF'

# Create original set of unit vectors

t = np.linspace(0, 2*np.pi, 100)

x = np.cos(t)

y = np.sin(t)

# Calculate eigenvectors and eigenvalues

eigVecs = np.linalg.eig(transformMatrix)[1]

eigVals = np.diag(np.linalg.eig(transformMatrix)[0])

# Create vectors of 0 to store new transformed values

newX = np.zeros(len(x))

newY = np.zeros(len(x))

for i in range(len(x)):

unitVector_i = np.array([x[i], y[i]])

# Apply the matrix to the vector

newXY = transformMatrix.dot(unitVector_i)

newX[i] = newXY[0]

newY[i] = newXY[1]

plotVectors([eigVecs[:,0], eigVecs[:,1]],

cols=[blue, blue])

plt.plot(x, y)

plotVectors([eigVals[0,0]*eigVecs[:,0], eigVals[1,1]*eigVecs[:,1]],

cols=[orange, orange])

plt.plot(newX, newY)

plt.xlim(-5, 5)

plt.ylim(-5, 5)

plt.show()

A = np.array([[1,-1], [-1, 4]])

linearTransformation(A)

The unit circle and its transformation by the matrix A. The vectors are the eigenvectors of A.

The unit circle and its transformation by the matrix A. The vectors are the eigenvectors of A.

We can see the unit circle in dark blue, the non scaled eigenvectors in light blue, the transformed unit circle in green and the scaled eigenvectors in yellow.

It is worth noting that the eigenvectors are orthogonal here because the matrix is symmetric. Let’s try with a non-symmetric matrix:

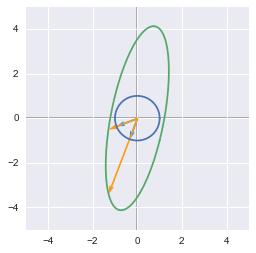

A = np.array([[1,1], [-1, 4]])

linearTransformation(A)

The unit circle and its transformation by the matrix A. The vectors are the eigenvectors of A (with A non symmetric).

The unit circle and its transformation by the matrix A. The vectors are the eigenvectors of A (with A non symmetric).

In this case, the eigenvectors are not orthogonal!

References

Videos of Gilbert Strang

Quadratic forms

Eigenvectors

Linear transformations

Feel free to drop me an email or a comment. The syllabus of this series can be found in the introduction post. All the notebooks can be found on Github.

This content is part of a series following the chapter 2 on linear algebra from the Deep Learning Book by Goodfellow, I., Bengio, Y., and Courville, A. (2016). It aims to provide intuitions/drawings/python code on mathematical theories and is constructed as my understanding of these concepts. You can check the syllabus in the introduction post.